How I Learned to Stop Worrying and Love the Edu-Tech Grift

AI is here to save education—just sign here, wire the funds, and forget the children.

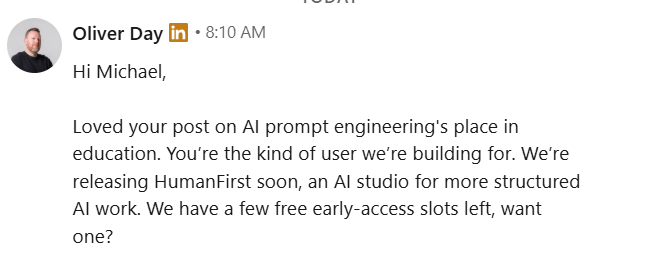

The solicitations come daily now, like spam filtered through the smirking mouth of Mammon: “Harness the Power of AI to Revolutionize Your Learning Environment.” “Transform Student Outcomes with Adaptive Intelligence.” “Let GPT Be Your New Faculty.”

Wrapped in cheerful UX palettes and buzzword confetti, these are not invitations to inquiry but harbingers of a slow-motion heist, an epic fleecing of the public mind. It’s the great AI-educational gold rush, wherein meaning is strip-mined and packaged for resale as “efficiency gains.”

It is difficult to trace the exact moment when education became a feeding trough for venture capitalists in Patagonia vests. Somewhere between Silicon Valley’s discovery of “edtech” as a market vertical and ChatGPT’s sudden deification in faculty lounges, a certain class of futurist hucksters smelled blood in the pedagogical water. And oh, how they gathered. Not educators, mind you, not even erstwhile pedagogues in retreat from the classroom, but speculators, consultants, pitch-deck visionaries fluent in disruption and agile enough to pivot from crypto to classroom with nothing more than a new Canva template.

These are the people who believe “Plato was cool, but what if he had a chatbot.” Who nod solemnly at slides titled From Socrates to Synthetics: Reimagining Inquiry in the Age of AI. Who tout things like “emotional learning dashboards” and “sentiment analysis for formative assessment,” blissfully unaware that the students they claim to serve can barely form a coherent paragraph or sit still for five minutes without checking TikTok. The techno-evangelists peddle “solutions” to problems they do not understand, in an institution they do not respect, for a clientele (read: schools) whose desperation is only matched by its susceptibility to buzzwords.

This is not innovation. This is looting.

What they’re selling—what they say they’re selling—is nothing less than the salvation of learning itself, “democratized access,” “personalized instruction,” “scalable wisdom.” What they’re actually selling is software: subscription-based, proprietary, impressively beta-tested and disturbingly opaque. It is not designed to form minds but to collect data, to optimize workflow, to identify “pain points” and plug them with algorithmic paste. It is the seamless interface standing in for the slow agony of intellectual labor.

And schools—beleaguered, underfunded, philosophically unmoored—are buying.

They are buying AI tutors that don't understand irony. They are buying essay graders that cannot detect argument. They are buying language models trained on the detritus of the internet and calling it "curriculum enhancement." And in doing so, they are not just squandering resources. They are abdicating responsibility, turning over the sacred charge of forming young souls to the indifferent logic of stochastic parrots. (No offense to parrots.)

Behind it all is the scent of a familiar American enterprise: the commodification of crisis. AI is merely the latest pretext. The old forms—textbooks, tests, Common Core, SEL, gamification, flipped classrooms—have been monetized, wrung out, and discarded. Now the venture crowd circles like buzzards with ChatGPT integrations and AI dashboards, promising administrators salvation in exchange for a three-year license agreement and “full onboarding support.”

And the irony, thick as a Kafka fog, is that the people making the most money off "transforming education" are the least educated in anything that matters. They cannot quote Aristotle (and certainly do not understand him). They do not read history. They have never led a classroom or borne the metaphysical weight of trying to explain, to real, squirming, skeptical human beings, why anything is worth knowing.

But they know how to raise capital.

They know how to demo.

They know how to make a superintendent (yours truly excepted) feel like he’s standing at the bleeding edge of progress when what he’s really doing is handing over the keys to the kingdom so his district can boast a marginal bump in “student engagement metrics.”

In another era, we might have called this what it is: a racket. A long con. The reduction of education to a service layer on top of a software product.

Now it is called "future-ready."

And in this future there will be no classrooms, only content modules; no teachers, only facilitators; no thought, only frictionless interaction. The aim is not wisdom. It is user retention.

To resist, then, is to refuse the doctrine that efficiency is the highest virtue, that learning is best when frictionless, that students are users and teachers are systems integrators. It is to insist that a child’s mind is not a market, and that the purpose of education cannot be aligned with quarterly earnings reports.

Until then, the solicitations will keep coming.

And each one will remind us: the train is leaving the station. All aboard the algorithmic express. Next stop: nowhere.

Michael S. Rose, a leader in the classical education movement, is author of The Art of Being Human, Ugly As Sin and other books. His articles have appeared in dozens of publications including The Wall Street Journal, Epoch Times, New York Newsday, National Review, and The Dallas Morning News.

Thanks, Dad, for sharing this one...

Michael Rose is spot on with this - I could argue that all of AI, not just AI education, is fundamentally a grift. But let me add: they have to justify those valuations, unfortunately. So they're going to keep grifting.

These AI companies raised massive funding at sky-high valuations based on promises of "transforming everything." Now they need revenue to justify those numbers, so they're aggressively pushing half-baked products into schools and claiming every update is the greatest thing, and there are plenty of people online happy to push the narrative.

School administrators are often non-technical and overwhelmed, making them perfect targets for flashy demos that promise to solve all their problems with one purchase. It's easier to buy a "solution" than address systemic issues like funding or teacher retention.

Many of these companies aren't even trying to make money from the software itself. They'll likely make more from monetizing student data down the road, making schools the product rather than the customer.

Remember when tablets were going to revolutionize learning? VCs throw money at "disrupting education," founders pivot from other failed startups, and schools get stuck with expensive tools that gather dust.

Now schools are shifting AWAY from screens because kids can't focus on basic tasks - and we want to introduce tools that eliminate critical thinking entirely?

The people building these "intelligent" systems often can't solve basic educational problems like student engagement or critical thinking. They just want the $$$$. They're just automating the easy parts while ignoring what actually matters - the slow, difficult work of forming minds that Rose talks about here.

Michael, thank you for this insight. Unfortunately, while you, the Pope, and a lot of other really smart people see that AI is a potential threat to human integrity, the people who drive it have no incentive to apply ethics, logic, or any metric outside of the bottom line in charting their course. As with so many other modern scientific "advancements," AI's advocates insist on applying it to everything, even when it does harm (intellectual, material, or moral) or provides no discernible good to individuals or society. Those of us who are not fully on board are considered luddites or "deniers."